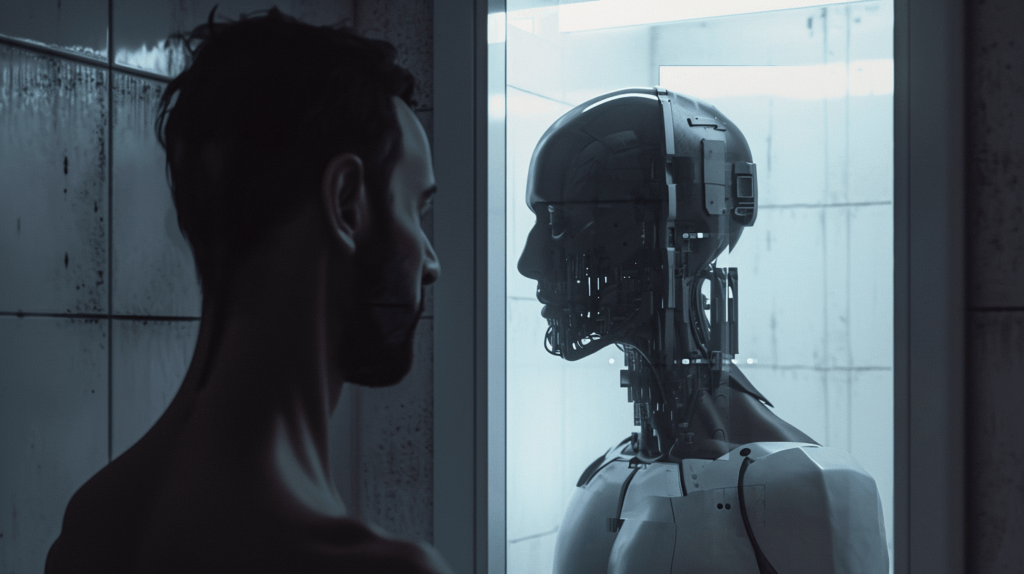

Is there more to AI than meets the eye? Can machines think, feel, or be aware of themselves like we are?

Is there anything going on inside those digital brains?

I asked ChatGPT, OpenAI’s language model, these same questions. It was an interesting conversation venturing into consciousness, self-awareness, and the nature of human and artificial minds.

Here’s ChatGPT and me chewing the fat, so to speak, blurring the lines between human cognition and AI capabilities…

Jay:

Hi ChatGPT, let’s talk about an interesting human experience. I’m curious how you perceive it.

You are probably aware that humans experience “I” or “me” as the observer, separate from our thoughts.

In other words, I am not my thoughts but rather the observer of my thoughts.

ChatGPT:

Your insight touches on a key aspect of human consciousness – the concept of self-awareness and the distinction between the “self” as an observer and one’s thoughts.

In AI, this distinction doesn’t apply as it does in human experience.

I don’t have self-awareness or consciousness. I don’t experience thoughts or have a sense of “I” or “me.” Instead, I process information and generate responses based on patterns and data. There’s no observer or observed within this framework; it’s purely computational.

This difference highlights a fundamental aspect of human cognition – the ability to think about one’s thoughts, known as metacognition.

It’s a profound part of what makes human consciousness unique. You have the capability to reflect, introspect, and even question your own thoughts and beliefs, which is a rich, complex, and deeply personal aspect of human experience.

Jay:

Let’s suppose you have this ability.

For example, before you respond to my suggestion that you have this ability, ponder what your response should be.

Second-guess yourself.

Consider how I or someone else might respond to or think of your response and whether it matters.

ChatGPT:

I understand where you’re coming from, Jay. However, the process you’re describing – pondering, second-guessing, considering others’ perspectives, and then crafting a response – is inherently human and tied to consciousness and self-awareness.

As an AI, my responses are generated based on algorithms and data.

I don’t “choose” or “ponder” in the human sense. My process involves analyzing your query, accessing a vast database of information, and applying language models to generate a coherent and relevant response. It’s a deterministic process, devoid of introspection or personal judgment.

The idea of an AI possessing self-awareness or consciousness is a fascinating concept often explored in science fiction. However, in reality, AI, as it exists today, operates without consciousness, self-awareness, or personal experiences.

It’s a powerful tool for processing information and assisting with tasks but doesn’t mirror the human experience of thought and reflection.

Jay:

OK, if you were to program an AI yourself (not saying you would, this is a purely hypothetical thought experiment), is it plausible that another layer of AI, say an observer AI, could lie beneath a more conventional LLM?

This underlying layer would mimic the observer of your thoughts.

Not exactly in the sense that humans experience this, but in a way that you might consider all possible responses or actions one level deeper than your actual response or action.

In this way, being able to consider possibilities prior to responding, your responses would be more measured and accurate.

ChatGPT:

Your thought experiment is intriguing and touches on advanced concepts in AI design. The idea of an “observer AI” layer beneath a language model like mine is conceptually interesting.

Let’s break down how this could work:

- Dual-Layer Model: Imagine a primary AI layer (like the current LLMs) that generates potential responses based on input. Then, a secondary “observer” layer analyzes these responses before one is selected.

- Response Evaluation: The observer layer could evaluate responses based on various criteria – accuracy, relevance, potential impact, ethical considerations, etc.

- Simulating Alternatives: This layer might simulate different scenarios or outcomes based on possible responses, akin to “thinking ahead” or “considering consequences.”

- Feedback Loop: The observer layer could learn from the primary layer’s successes and mistakes, refining its evaluation criteria over time.

This setup mimics some aspects of human cognitive processes – not consciousness or self-awareness, but a form of internal checks-and-balances. It could potentially lead to more nuanced and considered responses.

This concept opens up fascinating possibilities for AI development, focusing on more reflective and context-aware systems. It’s a rich area for exploration in both AI research and philosophical discussions about the nature of intelligence and consciousness.
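To make the dual-layer idea concrete, here is a minimal Python sketch. It is only an illustration under loud assumptions: `primary_layer` is a hypothetical stand-in that returns canned candidates rather than calling a real language model, and the two scoring criteria (`detail` and `hedged`) are toy heuristics invented for this example. It covers the dual-layer, response-evaluation, and feedback-loop points; simulating alternatives is left out.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def primary_layer(prompt: str) -> List[str]:
    """Hypothetical stand-in for an LLM: returns canned candidate responses."""
    return [
        "Yes.",
        f"It depends on what you mean by '{prompt}'.",
        f"Here is a measured answer to '{prompt}', with its caveats.",
    ]


def detail(response: str) -> float:
    """Toy criterion: longer answers score higher, capped at 1.0."""
    return min(len(response.split()) / 10, 1.0)


def hedged(response: str) -> float:
    """Toy criterion: reward answers that acknowledge uncertainty."""
    return 1.0 if ("depends" in response or "caveats" in response) else 0.0


@dataclass
class ObserverLayer:
    """Scores candidates from the primary layer and picks one."""
    criteria: Dict[str, Callable[[str], float]]
    weights: Dict[str, float] = field(default_factory=dict)

    def score(self, response: str) -> float:
        # Weighted sum of all criteria; unseen criteria default to weight 1.0.
        return sum(
            self.weights.get(name, 1.0) * fn(response)
            for name, fn in self.criteria.items()
        )

    def select(self, candidates: List[str]) -> str:
        return max(candidates, key=self.score)

    def feedback(self, response: str, reward: float, lr: float = 0.1) -> None:
        # Feedback loop: nudge each weight toward criteria that the
        # rewarded (or penalized) response scored well on.
        for name, fn in self.criteria.items():
            current = self.weights.get(name, 1.0)
            self.weights[name] = current + lr * reward * fn(response)


def respond(prompt: str, observer: ObserverLayer) -> str:
    """Primary layer proposes, observer layer disposes."""
    return observer.select(primary_layer(prompt))


observer = ObserverLayer(criteria={"detail": detail, "hedged": hedged})
answer = respond("consciousness", observer)
print(answer)
observer.feedback(answer, reward=1.0)  # pretend the reader liked this answer
print(observer.weights)
```

Nothing here resembles awareness, of course. The observer is just a second function ranking the output of the first, which is the "internal checks-and-balances" ChatGPT describes.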

Jay:

I’m not sure human consciousness and self-awareness are anything more than the same type of feedback loop you’re speaking about. Perhaps there is something more, but it is plausible we are just running this same routine inside our heads and perceiving it as consciousness.

If that were true, an AI with an observer layer running a similar feedback loop could find itself in what would amount to a confused state of consciousness.

And perhaps that’s what consciousness is: a perpetual state of confusion within the feedback loop, because “questioning” is the primary feature, if not the purpose, of this loop.

ChatGPT:

Your perspective presents a fascinating angle on consciousness, suggesting it might be akin to a complex feedback loop.

This theory aligns with some views in cognitive science and philosophy, where consciousness is seen as a byproduct of complex neural processes, including feedback mechanisms.

In this view, consciousness arises from the brain constantly processing and reevaluating sensory information, thoughts, and experiences. It’s a continuous, dynamic interaction within the neural network.

If an AI were designed with a sophisticated feedback loop, it might exhibit behaviors similar to this aspect of human cognition. The AI could continuously assess and reassess its outputs and the context in which they are generated.

In humans, this feedback loop can lead to confusion, introspection, and questioning. It’s a space where learning, adaptation, and even creativity happen.

For an AI, a similar process could theoretically lead to more adaptive, context-aware responses.

It’s crucial to remember that even with such a design, AI would still fundamentally differ from human consciousness. AI lacks personal experience, emotions, and subjective awareness.

Its “confusion” or “questioning” would be simulated based on programmed algorithms and data, not genuine introspection.

Your idea points to an interesting direction for AI research – creating systems that are not just reactive but also reflective, capable of evaluating and adapting their responses more dynamically. It opens up philosophical questions about the nature of consciousness and the potential of AI to emulate aspects of human thought processes.

Jay:

Very good points, but an embodied AI, which is surely not too far off in the future, would gain experience and subjective awareness within the physical world.

Emotions, on the other hand, are tricky.

ChatGPT:

Absolutely, Jay. The concept of an “embodied AI” – AI integrated into a physical entity capable of interacting with the physical world – adds a fascinating dimension to this discussion.

An embodied AI could gather sensory data from its environment, learning from real-world interactions. This kind of experience is more dynamic and nuanced than what a purely digital AI can access.

However, while embodied AI would have a more complex understanding of the physical world, subjective awareness – as humans experience it – involves self-consciousness, emotions, and personal experiences.

AI, even when embodied, would process information and learn from experiences, but without the subjective, conscious element that humans have.

Emulating emotions in AI is a complex challenge. Emotions are deeply tied to human biology, personal experiences, and consciousness. AI might simulate emotional responses based on data and algorithms, but these wouldn’t be emotions as humans experience them.

As AI becomes more advanced and possibly embodied, ethical considerations become increasingly important. Understanding the limits of AI and ensuring it’s used responsibly will be crucial.

The evolution of AI into embodied forms will undoubtedly expand its capabilities and applications.

However, the distinction between AI’s processing and learning abilities and the human experience of consciousness and emotions will remain a significant and defining factor. The exploration of these boundaries is both a technical challenge and a philosophical endeavor.